This startup acquisition announcement does not exist

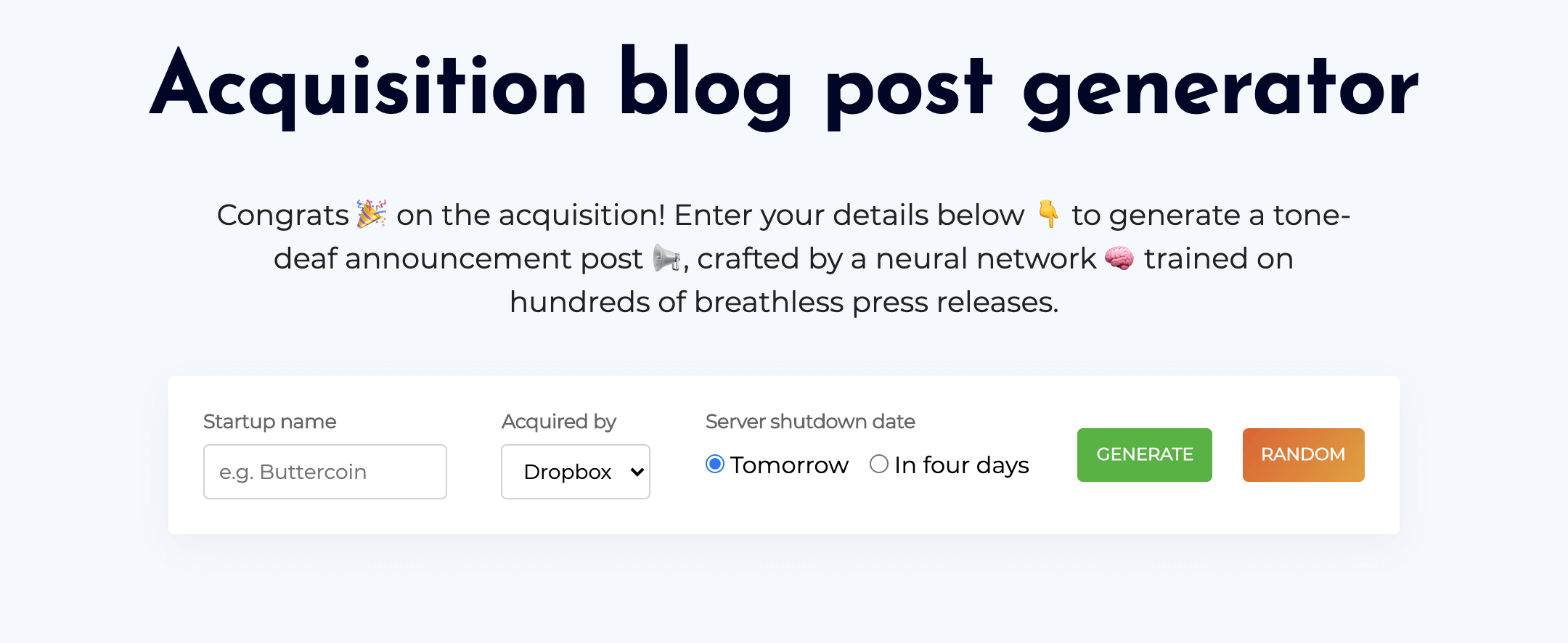

I built this website for generating startup acquisition announcements.

You can't go on a mission without doing something.— 🤖

Why

I’ve been following Our Incredible Journey, which collects announcement posts from startups that have been acquired by mega tech companies. In each post the founders struggle to contain their excitement and express endless gratitude to their users.

But inevitably stuffed into the last paragraph of the blog post is the announcement that the service is being shut down in like a week, even though the founders are now millionaires on the back of their users’ loyalty.

The tone is totally inappropriate for the message. In fact, the posts are so formulaic and impersonal that they may as well be generated.

It's been a while since we've had a good idea of what we're doing— 🤖

Dataset

First I needed a dataset of announcements to train on. Copy-pasting each article from Our Incredible Journey into a text file saved me from doing a bunch of time-consuming automation. I ended up with 174 blog posts.

I removed personal details (names, phone numbers, etc.), which were getting regurgitated by an overly retentive AI. I replaced the startup name, buyer name, and shutdown date with tokens. Finally, I split each post into three parts: the title, post body, and signature.
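A minimal sketch of the token replacement and the three-way split. It assumes each saved post has its title on the first line and its signature after a `---` separator line; that file layout, the `$shutdown_date` token, and the helper names are hypothetical (`$company_name` and `$buyer_name` are the tokens visible in the generated samples). Removing personal details isn't covered here.

```python
import re

# $company_name and $buyer_name appear in the generated samples;
# $shutdown_date is a hypothetical name for the date token.
COMPANY_TOKEN = "$company_name"
BUYER_TOKEN = "$buyer_name"
DATE_TOKEN = "$shutdown_date"


def anonymize_post(text, startup, buyer, shutdown_dates):
    """Replace the startup name, buyer name and shutdown date(s) with tokens.

    Hypothetical helper: the names and date strings are supplied per post.
    """
    # Whole-word, case-insensitive match so "Acme" also catches "ACME".
    text = re.sub(r"\b%s\b" % re.escape(startup), COMPANY_TOKEN, text, flags=re.IGNORECASE)
    text = re.sub(r"\b%s\b" % re.escape(buyer), BUYER_TOKEN, text, flags=re.IGNORECASE)
    for date in shutdown_dates:
        text = text.replace(date, DATE_TOKEN)
    return text


def split_post(text, separator="---"):
    """Split a post into (title, body, signature).

    Assumes the title is the first line and the signature follows a line
    containing only `separator`; this layout is an assumption.
    """
    title, _, rest = text.strip().partition("\n")
    body, _, signature = rest.partition("\n%s\n" % separator)
    return title.strip(), body.strip(), signature.strip()
```

Each of the three parts then goes into its own training file, one per model.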

9 of the 174 posts contain the text “incredible journey”. 2 misspell “acquired” as “aqcuired”, which is a much better success rate than I achieved in the first draft of this blog post.

I am a very small business owner and I am extremely excited about the future of our business.— 🤖

Modelling

Next, I needed three models to generate text: one for each of the post title, post body, and signature. Because each post has a similar subject and tone, these models could be independent.

I started off using a Markov model. Markov models were all the rage 10 years ago for generating Kanye West lyrics, but the results were poor when applied to long-form text like blog posts: they just don’t make sense and are clearly non-human. For example:

We will be more excited to join $buyer_name as they always been an incredible journey and we hope you continue to get their hard at $buyer_name to help make the transition easier by doing.
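For reference, a word-level Markov chain generator fits in a few lines. This is a generic order-2 sketch of the technique, not necessarily the model used here; `build_chain` and `generate` are illustrative names. Each word is chosen using only the previous two, so nothing holds the post's overall structure together, which is why the output drifts like the sample above.

```python
import random
from collections import defaultdict


def build_chain(texts, order=2):
    """Map each tuple of `order` consecutive words to the words that follow it."""
    chain = defaultdict(list)
    for text in texts:
        words = text.split()
        for i in range(len(words) - order):
            state = tuple(words[i:i + order])
            chain[state].append(words[i + order])
    return chain


def generate(chain, max_words=50, seed=None):
    """Random walk through the chain, starting from a randomly chosen state."""
    rng = random.Random(seed)
    state = rng.choice(sorted(chain))
    output = list(state)
    for _ in range(max_words - len(state)):
        followers = chain.get(state)
        if not followers:  # dead end: state only seen at the end of a text
            break
        next_word = rng.choice(followers)
        output.append(next_word)
        state = state[1:] + (next_word,)
    return " ".join(output)
```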

There’s been a lot of buzz around GPT-2 for text synthesis, and after giving it a shot I can see why: it works exceedingly well, heaps better than I had expected, with very little tweaking needed. The generated sentences are coherent, and the model really captures the overall structure of typical blog posts (we’ve been acquired! → our buyer is the greatest → we’re shutting down the servers → thanks users and investors).

I wanted to fit the model enough to parrot back the style of repetitive blog posts, but not so much that it would memorize actual phrases from the training data. I used the 355M model, fine-tuned for 100 steps at a learning rate of 5e-5, then generated posts with a fairly high temperature of 1.2. This notebook by minimaxir made everything much easier.
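The temperature setting rescales the model's output logits before sampling: dividing by a value above 1 flattens the distribution, so less likely words get picked more often. A minimal, library-agnostic sketch of that step (the function names and example logits are illustrative, not the notebook's internals):

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities after dividing by the temperature.

    temperature > 1 flattens the distribution (more surprising picks);
    temperature < 1 sharpens it towards the most likely token.
    """
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature=1.0, rng=random):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.1]
print(softmax_with_temperature(logits, 1.0))
print(softmax_with_temperature(logits, 1.2))  # top token's share shrinks
```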

Results were largely excellent, but there was a lot of drivel too. Personal information:

If you still wish to access your data please call [redacted phone number of a car mechanic in Florida].— 🤖

Possible self-awareness:

I am a young man and I am a woman— 🤖

Oh, my God, my God, my God, my God, my God, my God, my God, my God, my God, my God, my God.— 🤖

But mostly just junk:

<\/thetitle><\!-- * * * * * * * * *— 🤖

Because my AI clearly can’t be trusted to run unattended, I generated a few hundred samples for the title, body, and signature, and manually removed the ones that were rubbish.

The time has come for $company_name to stop being a joke.— 🤖

Startup names

While the titles, posts, and signatures are generated by neural networks at the word level, I couldn’t get one to work well enough to generate startup names at the character level. Instead, I drew names from three different sources:

- thisworddoesnotexist.com, a GPT-2-powered word generator.

- A list of acquired/defunct Y Combinator startups.

- Some business name generator website.

See if you can guess which name comes from where.

I'm going to be a little bit of a little bit of a jerk.— 🤖

Permalink

I wanted users to be able to share posts. I’ll never pass up an opportunity to build a stateless webapp, so I put the information about each post into the URL.

Each post has 6 pieces of state:

- Startup name

- Server shutdown date

- Title id

- Post body id

- Signature id

- Buyer company id

The startup name is user-entered text; the other 5 are stored on the server so IDs can be used instead of the full text.

The first algorithm I tried was to concatenate everything together and base62-encode it:

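```python
import base62  # assuming the pybase62 package

# Format: title_id|text_id|sig_id|shutdown_date|buyer_id|startup_name
decoded = '23|391|8|20200329|3|SwapBox'

# encodebytes() takes bytes; base62.encode() only accepts integers
encoded = base62.encodebytes(decoded.encode('ascii'))  # c7vnDXtVUknTgWIKNOzXYl4Lup7VddJ68WfA
```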

It worked but was a bit long. Encoding the integers as strings isn’t the most efficient, and using base62 IDs would have been a pain with the storage format I was using for the text server-side. So I made a few changes:

- Store the date without the millennium (2020-03-29 becomes 200329), doing my bit to contribute to the Y3K problem.

- The integer IDs get zero-padded, then concatenated into a single integer: 0230039108200329. This 8-byte integer encodes to just 10 characters in base62, rather than 20 as above.

- The integer bytes and the startup name string bytes are concatenated. Because everything is fixed-width, it’s easy to reverse the process to decode the IDs.

- For the startup name, I tried a few libraries for compressing short strings, like shoco and smaz, but they didn’t improve compression ratios for the strings I was testing.

- Finally, the data is encrypted before base62 encoding, reducing the amount of user input I have to validate. The version of pycrypto on Google App Engine doesn’t support authenticated encryption like AES-GCM, so I rolled my own using AES + HMAC.
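The packing steps above can be sketched with the standard library. The field widths (3, 5, 2 and 6 digits) are inferred from the example integer 0230039108200329; that integer has no visible slot for the buyer ID, so this sketch stores it as one extra byte, which is an assumption. The base62 alphabet, the 0x01 prefix byte used to preserve leading zero bytes, and all function names are illustrative, and the encryption step is left out.

```python
import struct

# Widths in decimal digits, inferred from 0230039108200329:
# title 023, body 00391, signature 08, date 200329. Assumption, not the real code.
FIELDS = [("title_id", 3), ("text_id", 5), ("sig_id", 2), ("date", 6)]
BASE62 = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"


def pack_state(ids, buyer_id, startup_name):
    """Pack state as an 8-byte integer, a 1-byte buyer ID, then the UTF-8 name."""
    digits = "".join(str(ids[name]).zfill(width) for name, width in FIELDS)
    # ">QB": big-endian unsigned 64-bit integer followed by one unsigned byte.
    return struct.pack(">QB", int(digits), buyer_id) + startup_name.encode("utf-8")


def unpack_state(data):
    """Reverse pack_state: the fixed-width prefix is 9 bytes, the rest is the name."""
    number, buyer_id = struct.unpack(">QB", data[:9])
    digits = str(number).zfill(sum(width for _, width in FIELDS))
    ids, pos = {}, 0
    for name, width in FIELDS:
        ids[name] = int(digits[pos:pos + width])
        pos += width
    return ids, buyer_id, data[9:].decode("utf-8")


def base62_encode(data):
    """Encode bytes as base62; a 0x01 prefix byte keeps leading zero bytes."""
    n = int.from_bytes(b"\x01" + data, "big")
    out = []
    while n:
        n, rem = divmod(n, 62)
        out.append(BASE62[rem])
    return "".join(reversed(out))


def base62_decode(text):
    """Inverse of base62_encode."""
    n = 0
    for ch in text:
        n = n * 62 + BASE62.index(ch)
    raw = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return raw[1:]  # drop the 0x01 prefix byte
```

Without encryption, the SwapBox example packs into 16 bytes and encodes to a 22-character token; an AES + HMAC layer would add an IV and a MAC tag on top of that.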

The resulting URLs are much shorter.
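The AES half of AES + HMAC needs a crypto library, but the HMAC half of an encrypt-then-MAC scheme can be shown with the standard library alone. A minimal sketch, assuming a truncated 16-byte HMAC-SHA256 tag; `seal`, `open_sealed`, and the tag length are hypothetical, and `ciphertext` stands in for the AES output with its IV.

```python
import hashlib
import hmac

TAG_LEN = 16  # truncated HMAC-SHA256 tag in bytes (assumption, keeps URLs short)


def seal(key, ciphertext):
    """Append an HMAC-SHA256 tag over the ciphertext (encrypt-then-MAC).

    In a full scheme the MACed bytes include the IV, and the HMAC key is
    separate from the AES key.
    """
    tag = hmac.new(key, ciphertext, hashlib.sha256).digest()[:TAG_LEN]
    return ciphertext + tag


def open_sealed(key, blob):
    """Return the ciphertext if its tag checks out, otherwise raise ValueError.

    Verifying before decrypting means a tampered URL is rejected without
    ever reaching the AES or parsing code.
    """
    if len(blob) < TAG_LEN:
        raise ValueError("token too short")
    ciphertext, tag = blob[:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(key, ciphertext, hashlib.sha256).digest()[:TAG_LEN]
    if not hmac.compare_digest(tag, expected):  # constant-time comparison
        raise ValueError("bad tag")
    return ciphertext
```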

This morning, we have been informed by the Federal Bureau of Investigation we will be shutting down our website.— 🤖

Stack

The good: webapp2, homemade HTML+CSS.

The bad: Python 2.7, Google Analytics.

The could-be-deprecated-any-day-now: Google App Engine legacy runtime.

There, I said it. All the while staying true to myself: "This isn't about building great products anymore."— 🤖

Open source

I finished the app and this blog post a few months ago, but it’s sat in my drafts folder waiting until I had time to make the code open-sourceable. For me that means adding documentation, fixing security holes presently covered by a thin tea towel of obscurity, removing hard-coded ops secrets, and generally raising quality up to the level of my other public code.

In the interest of getting shit done, I’m publishing this anyway, sans source, before the GPT-3 model weights are released and I have to rerun everything, and it won’t be as funny because the grammar will be too good.

The repo is a bunch of training data I probably don’t have the right to distribute, run through an open Google Colab notebook that I barely changed, served by a likely insecure CRUD webapp.

If you'd like a copy of your data, please let us know. We'll happily share it with the world.— 🤖

The end

We couldn't find a better way to end this chapter— 🤖